1. Introduction

The Orientation Sensor API extends the Generic Sensor API [GENERIC-SENSOR] to provide generic information describing the device’s physical orientation in relation to a three dimensional Cartesian coordinate system.

The AbsoluteOrientationSensor class inherits from the OrientationSensor interface and describes the device’s physical orientation in relation to the Earth’s reference coordinate system.

Other subclasses describe the orientation in relation to other stationary directions, such as true north, or to non-stationary directions, such as a device’s own z-position drifting towards its latest most stable z-position.

The data provided by the OrientationSensor subclasses are similar to data from DeviceOrientationEvent, but the Orientation Sensor API has the following significant differences:

- The Orientation Sensor API represents orientation data in WebGL-compatible formats (quaternion, rotation matrix).
- The Orientation Sensor API satisfies stricter latency requirements.
- Unlike DeviceOrientationEvent, the OrientationSensor subclasses explicitly define which low-level motion sensors are used to obtain the orientation data, thus obviating possible interoperability issues.
- Instances of OrientationSensor subclasses are configurable via the SensorOptions constructor parameter.

2. Use Cases and Requirements

The use cases and requirements are discussed in the Motion Sensors Explainer document.

3. Examples

    const sensor = new AbsoluteOrientationSensor();
    const mat4 = new Float32Array(16);
    sensor.start();
    sensor.onerror = event => console.log(event.error.name, event.error.message);
    sensor.onreading = () => {
      sensor.populateMatrix(mat4);
    };

    const sensor = new AbsoluteOrientationSensor({ frequency: 60 });
    const mat4 = new Float32Array(16);
    sensor.start();
    sensor.onerror = event => console.log(event.error.name, event.error.message);

    function draw(timestamp) {
      window.requestAnimationFrame(draw);
      try {
        sensor.populateMatrix(mat4);
      } catch (e) {
        // mat4 has not been updated.
      }
      // Drawing...
    }

    window.requestAnimationFrame(draw);

4. Security and Privacy Considerations

There are no specific security and privacy considerations beyond those described in the Generic Sensor API [GENERIC-SENSOR].

5. Model

The OrientationSensor class extends the Sensor class and provides a generic interface representing device orientation data.

To access the Orientation Sensor sensor type’s latest reading, the user agent must invoke the request sensor access abstract operation for each of the low-level sensors used by the concrete orientation sensor. The table below describes the mapping between concrete orientation sensors and the permission tokens defined by low-level sensors.

| OrientationSensor subclass | Permission tokens |
|---|---|
| AbsoluteOrientationSensor | "accelerometer", "gyroscope", "magnetometer" |
| RelativeOrientationSensor | "accelerometer", "gyroscope" |
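As a rough illustration of the mapping above, the sketch below keeps the table as a lookup object and reports which tokens are not yet granted. The names `PERMISSION_TOKENS` and `missingPermissions` are hypothetical and not part of this specification; the `states` argument is assumed to be a plain object built by the caller, for example from the `state` of each `navigator.permissions.query()` result.

```javascript
// Lookup copied from the permission token table for the two concrete sensors.
const PERMISSION_TOKENS = {
  AbsoluteOrientationSensor: ["accelerometer", "gyroscope", "magnetometer"],
  RelativeOrientationSensor: ["accelerometer", "gyroscope"],
};

// Hypothetical helper (not defined by the specification).
// `states` maps a permission token to "granted", "denied" or "prompt".
// Returns the tokens that are required by the sensor but not granted.
function missingPermissions(sensorName, states) {
  const tokens = PERMISSION_TOKENS[sensorName];
  if (!tokens) {
    throw new TypeError(`Unknown orientation sensor: ${sensorName}`);
  }
  return tokens.filter(token => states[token] !== "granted");
}
```

An empty result would suggest that calling start() is unlikely to fail for permission reasons; the user agent remains the authority on the actual outcome.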

A latest reading for a Sensor of Orientation Sensor sensor type includes an entry whose key is "quaternion" and whose value contains a four element list.

The elements of the list are equal to components of a unit quaternion [QUATERNIONS] [Vx * sin(θ/2), Vy * sin(θ/2), Vz * sin(θ/2), cos(θ/2)] where V is the unit vector (whose elements are Vx, Vy, and Vz) representing the axis of rotation, and θ is the rotation angle about the axis defined by the unit vector V.

Note: The quaternion components are arranged in the list as [q1, q2, q3, q0] [QUATERNIONS], i.e. the components representing the vector part of the quaternion go first and the scalar part component, which is equal to cos(θ/2), goes last. This order is used for better compatibility with most existing WebGL frameworks; however, other libraries could use a different order when exposing a quaternion as an array, e.g. [q0, q1, q2, q3].
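To make the component layout concrete, here is a minimal sketch that builds a quaternion in the vector-first order described above and reorders it for a library that expects the scalar first. Both function names are hypothetical helpers introduced for illustration only; the axis is normalized inside the helper so that callers may pass a non-unit vector.

```javascript
// Hypothetical helper: returns [Vx*sin(θ/2), Vy*sin(θ/2), Vz*sin(θ/2), cos(θ/2)].
// `axis` is a three-element array, `theta` is the rotation angle in radians.
function quaternionFromAxisAngle(axis, theta) {
  const [vx, vy, vz] = axis;
  const norm = Math.hypot(vx, vy, vz);
  if (norm === 0) {
    throw new RangeError("axis must be a non-zero vector");
  }
  // Dividing by the norm turns the axis into the unit vector V.
  const s = Math.sin(theta / 2) / norm;
  return [vx * s, vy * s, vz * s, Math.cos(theta / 2)];
}

// Hypothetical helper: converts [q1, q2, q3, q0] into [q0, q1, q2, q3].
function toScalarFirst([x, y, z, w]) {
  return [w, x, y, z];
}
```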

The concrete OrientationSensor subclasses that are created through sensor-fusion of the low-level motion sensors are presented in the table below:

| OrientationSensor subclass | Low-level motion sensors |
|---|---|
| AbsoluteOrientationSensor | Accelerometer, Gyroscope, Magnetometer |
| RelativeOrientationSensor | Accelerometer, Gyroscope |

Note: Accelerometer, Gyroscope and Magnetometer low-level sensors are defined in the [ACCELEROMETER], [GYROSCOPE], and [MAGNETOMETER] specifications respectively. The sensor fusion is platform specific and can happen in software or hardware, e.g. on a sensor hub.

This example explicitly queries permissions for AbsoluteOrientationSensor before calling start().

    const sensor = new AbsoluteOrientationSensor();
    Promise.all([
      navigator.permissions.query({ name: "accelerometer" }),
      navigator.permissions.query({ name: "magnetometer" }),
      navigator.permissions.query({ name: "gyroscope" })
    ]).then(results => {
      if (results.every(result => result.state === "granted")) {
        sensor.start();
        ...
      } else {
        console.log("No permissions to use AbsoluteOrientationSensor.");
      }
    });

Another approach is to simply call start() and set the onerror event handler.

    const sensor = new AbsoluteOrientationSensor();
    sensor.start();
    sensor.onerror = event => {
      if (event.error.name === 'SecurityError')
        console.log("No permissions to use AbsoluteOrientationSensor.");
    };

5.1. The AbsoluteOrientationSensor Model

The AbsoluteOrientationSensor class is a subclass of OrientationSensor which represents the Absolute Orientation Sensor.

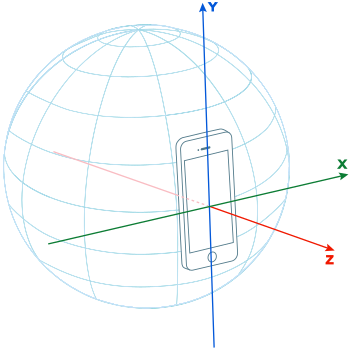

For the absolute orientation sensor the value of latest reading["quaternion"] represents the rotation of a device’s local coordinate system in relation to the Earth’s reference coordinate system defined as a three dimensional Cartesian coordinate system (x, y, z), where:

-

x-axis is a vector product of y.z that is tangential to the ground and points east,

-

y-axis is tangential to the ground and points towards magnetic north, and

-

z-axis points towards the sky and is perpendicular to the plane made up of x and y axes.

The device’s local coordinate system is the same as defined for the low-level motion sensors. It can be either the device coordinate system or the screen coordinate system.

Note: Figure below represents the case where device’s local coordinate system and the Earth’s reference coordinate system are aligned, therefore, orientation sensor’s latest reading would represent 0 (rad) [SI] rotation about each axis.

5.2. The RelativeOrientationSensor Model

The RelativeOrientationSensor class is a subclass of OrientationSensor which represents the Relative Orientation Sensor.

For the relative orientation sensor the value of latest reading["quaternion"] represents the rotation of a device’s local coordinate system in relation to a stationary reference coordinate system. The stationary reference coordinate system may drift due to the bias introduced by the gyroscope sensor; thus, the rotation value provided by the sensor may drift over time.

The stationary reference coordinate system is defined as an inertial three dimensional Cartesian coordinate system that remains stationary as the device hosting the sensor moves through the environment.

The device’s local coordinate system is the same as defined for the low-level motion sensors. It can be either the device coordinate system or the screen coordinate system.

Note: The relative orientation sensor data could be more accurate than the data provided by the absolute orientation sensor, as the sensor is not affected by magnetic fields.

6. API

6.1. The OrientationSensor Interface

    typedef (Float32Array or Float64Array or DOMMatrix) RotationMatrixType;

    [SecureContext, Exposed=Window]
    interface OrientationSensor : Sensor {
      readonly attribute FrozenArray<double>? quaternion;
      void populateMatrix(RotationMatrixType targetMatrix);
    };

    enum OrientationSensorLocalCoordinateSystem { "device", "screen" };

    dictionary OrientationSensorOptions : SensorOptions {
      OrientationSensorLocalCoordinateSystem referenceFrame = "device";
    };

6.1.1. OrientationSensor.quaternion

Returns a four-element FrozenArray whose elements contain the components of the unit quaternion representing the device orientation.

In other words, this attribute returns the result of invoking get value from latest reading with this and "quaternion" as arguments.
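Because the attribute is nullable, consumers may want to guard against a missing reading before using it. The sketch below is one possible way to do so while recovering the rotation angle θ and the axis V from the four components; `toAxisAngle` is a hypothetical helper, not part of this API, and the small threshold used to detect a zero rotation is an arbitrary choice.

```javascript
// Hypothetical helper: inverts [Vx*sin(θ/2), Vy*sin(θ/2), Vz*sin(θ/2), cos(θ/2)].
// Returns null when there is no reading, otherwise { angle, axis }.
function toAxisAngle(quaternion) {
  if (quaternion === null) {
    return null; // No reading is available yet.
  }
  const [x, y, z, w] = quaternion;
  // The length of the vector part equals |sin(θ/2)| for a unit quaternion.
  const sinHalf = Math.hypot(x, y, z);
  const angle = 2 * Math.atan2(sinHalf, w);
  // For a (near) zero rotation the axis is undefined, so report null.
  const axis = sinHalf < 1e-12
    ? null
    : [x / sinHalf, y / sinHalf, z / sinHalf];
  return { angle, axis };
}
```

In a page, this could be called as `toAxisAngle(sensor.quaternion)` inside an onreading handler.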

6.1.2. OrientationSensor.populateMatrix()

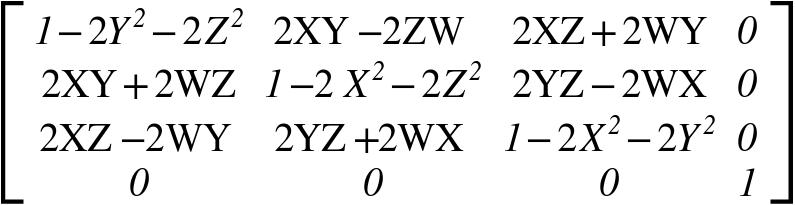

The populateMatrix() method populates the given object with rotation matrix

which is converted from the value of latest reading["quaternion"] [QUATCONV], as shown below:

where:

- W = cos(θ/2)
- X = Vx * sin(θ/2)
- Y = Vy * sin(θ/2)
- Z = Vz * sin(θ/2)

The rotation matrix is flattened into the targetMatrix object in column-major order, as described in the populate rotation matrix algorithm.

The populateMatrix() method must run these steps or their equivalent:

- If targetMatrix is not of type defined by the RotationMatrixType union, throw a "TypeError" exception and abort these steps.
- If targetMatrix is of type Float32Array or Float64Array with a size less than sixteen, throw a "TypeError" exception and abort these steps.
- Let quaternion be the result of invoking get value from latest reading with this and "quaternion" as arguments.
- If quaternion is null, throw a "NotReadableError" DOMException and abort these steps.
- Let x be the value of quaternion[0]
- Let y be the value of quaternion[1]
- Let z be the value of quaternion[2]
- Let w be the value of quaternion[3]
- If targetMatrix is of Float32Array or Float64Array type, run these sub-steps:
    - Set targetMatrix[0] = 1 - 2 * y * y - 2 * z * z
    - Set targetMatrix[1] = 2 * x * y - 2 * z * w
    - Set targetMatrix[2] = 2 * x * z + 2 * y * w
    - Set targetMatrix[3] = 0
    - Set targetMatrix[4] = 2 * x * y + 2 * z * w
    - Set targetMatrix[5] = 1 - 2 * x * x - 2 * z * z
    - Set targetMatrix[6] = 2 * y * z - 2 * x * w
    - Set targetMatrix[7] = 0
    - Set targetMatrix[8] = 2 * x * z - 2 * y * w
    - Set targetMatrix[9] = 2 * y * z + 2 * x * w
    - Set targetMatrix[10] = 1 - 2 * x * x - 2 * y * y
    - Set targetMatrix[11] = 0
    - Set targetMatrix[12] = 0
    - Set targetMatrix[13] = 0
    - Set targetMatrix[14] = 0
    - Set targetMatrix[15] = 1
- If targetMatrix is of DOMMatrix type, run these sub-steps:
    - Set targetMatrix.m11 = 1 - 2 * y * y - 2 * z * z
    - Set targetMatrix.m12 = 2 * x * y - 2 * z * w
    - Set targetMatrix.m13 = 2 * x * z + 2 * y * w
    - Set targetMatrix.m14 = 0
    - Set targetMatrix.m21 = 2 * x * y + 2 * z * w
    - Set targetMatrix.m22 = 1 - 2 * x * x - 2 * z * z
    - Set targetMatrix.m23 = 2 * y * z - 2 * x * w
    - Set targetMatrix.m24 = 0
    - Set targetMatrix.m31 = 2 * x * z - 2 * y * w
    - Set targetMatrix.m32 = 2 * y * z + 2 * x * w
    - Set targetMatrix.m33 = 1 - 2 * x * x - 2 * y * y
    - Set targetMatrix.m34 = 0
    - Set targetMatrix.m41 = 0
    - Set targetMatrix.m42 = 0
    - Set targetMatrix.m43 = 0
    - Set targetMatrix.m44 = 1
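The typed-array branch of the steps above can be sketched as a standalone function, which may be handy for unit tests or for environments without sensor support. This is an illustration under stated assumptions rather than the user agent’s implementation: `populateMatrixFromQuaternion` is a hypothetical name, the quaternion is passed in explicitly instead of being read from the latest reading, the DOMMatrix branch is omitted, and a plain Error named "NotReadableError" stands in for the DOMException. The element order follows the steps exactly as listed.

```javascript
// Hypothetical standalone version of the Float32Array / Float64Array branch.
function populateMatrixFromQuaternion(quaternion, targetMatrix) {
  const isTypedArray = targetMatrix instanceof Float32Array ||
                       targetMatrix instanceof Float64Array;
  if (!isTypedArray) {
    throw new TypeError("targetMatrix must be a Float32Array or Float64Array");
  }
  if (targetMatrix.length < 16) {
    throw new TypeError("targetMatrix must hold at least sixteen elements");
  }
  if (quaternion === null) {
    // Stand-in for the "NotReadableError" DOMException (assumption).
    const error = new Error("No orientation reading is available");
    error.name = "NotReadableError";
    throw error;
  }
  const [x, y, z, w] = quaternion;
  // Indices 0..15, written in the same order as the listed sub-steps.
  targetMatrix.set([
    1 - 2 * y * y - 2 * z * z, 2 * x * y - 2 * z * w, 2 * x * z + 2 * y * w, 0,
    2 * x * y + 2 * z * w, 1 - 2 * x * x - 2 * z * z, 2 * y * z - 2 * x * w, 0,
    2 * x * z - 2 * y * w, 2 * y * z + 2 * x * w, 1 - 2 * x * x - 2 * y * y, 0,
    0, 0, 0, 1,
  ]);
  return targetMatrix;
}
```

For the identity quaternion [0, 0, 0, 1] the function fills the target with the 4x4 identity matrix.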

6.2. The AbsoluteOrientationSensor Interface

    [SecureContext, Exposed=Window]
    interface AbsoluteOrientationSensor : OrientationSensor {
      constructor(optional OrientationSensorOptions sensorOptions = {});
    };

To construct an AbsoluteOrientationSensor object the user agent must invoke the construct an orientation sensor object abstract operation for the AbsoluteOrientationSensor interface.

Supported sensor options for AbsoluteOrientationSensor are "frequency" and "referenceFrame".

6.3. The RelativeOrientationSensor Interface

    [SecureContext, Exposed=Window]
    interface RelativeOrientationSensor : OrientationSensor {
      constructor(optional OrientationSensorOptions sensorOptions = {});
    };

To construct a RelativeOrientationSensor object the user agent must invoke the construct an orientation sensor object abstract operation for the RelativeOrientationSensor interface.
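As a usage sketch for the two constructors, the helper below creates either sensor with feature detection and forwards only the "frequency" and "referenceFrame" options. `createOrientationSensor` and its `scope` parameter are hypothetical and exist only to keep the example testable outside a browser; in a page, `scope` would default to the global object.

```javascript
// Hypothetical helper: returns a sensor instance, or null when the interface
// is not exposed in `scope` (for example, in a non-supporting user agent).
function createOrientationSensor(className, options = {}, scope = globalThis) {
  const SensorClass = scope[className];
  if (typeof SensorClass !== "function") {
    return null;
  }
  // Forward only the sensor options named for these interfaces.
  const sensorOptions = {};
  for (const key of ["frequency", "referenceFrame"]) {
    if (options[key] !== undefined) {
      sensorOptions[key] = options[key];
    }
  }
  return new SensorClass(sensorOptions);
}
```

A call such as `createOrientationSensor("RelativeOrientationSensor", { frequency: 60, referenceFrame: "screen" })` would request readings resolved in the screen coordinate system; the constructor itself may still throw, so callers may want to wrap the call in try/catch.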

Supported sensor options for RelativeOrientationSensor are "frequency" and "referenceFrame".

7. Abstract Operations

7.1. Construct an Orientation Sensor object

- input
    - orientation_interface, an interface identifier whose inherited interfaces contain OrientationSensor.
    - options, an OrientationSensorOptions object.
- output
    - An OrientationSensor object.

- Let allowed be the result of invoking check sensor policy-controlled features with the interface identified by orientation_interface.
- If allowed is false, then:
    - Throw a SecurityError DOMException.
- Let orientation be a new instance of the interface identified by orientation_interface.
- Invoke initialize a sensor object with orientation and options.
- If options.referenceFrame is "screen", then:
    - Define local coordinate system for orientation as the screen coordinate system.
- Otherwise, define local coordinate system for orientation as the device coordinate system.
- Return orientation.
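The control flow of this abstract operation could be modelled roughly as follows. Everything here is an illustrative assumption: `constructOrientationSensor`, the `hooks` object, and the `localCoordinateSystem` property are hypothetical names; the policy check and the initialize step are injected as callbacks because their real definitions live in the Generic Sensor API; and a failed policy check is assumed to surface as an error named "SecurityError".

```javascript
// Hypothetical model of the steps above, not a user agent implementation.
function constructOrientationSensor(OrientationInterface, options = {}, hooks = {}) {
  const {
    // Stand-in for "check sensor policy-controlled features".
    checkPolicy = () => true,
    // Stand-in for "initialize a sensor object".
    initialize = (sensor, sensorOptions) => {
      sensor.frequency = sensorOptions.frequency;
    },
  } = hooks;

  if (!checkPolicy(OrientationInterface)) {
    // Assumption: a plain Error named "SecurityError" replaces the DOMException.
    const error = new Error("Sensor access is blocked by policy");
    error.name = "SecurityError";
    throw error;
  }

  const orientation = new OrientationInterface();
  initialize(orientation, options);
  // "screen" selects the screen coordinate system; anything else means "device".
  orientation.localCoordinateSystem =
    options.referenceFrame === "screen" ? "screen" : "device";
  return orientation;
}
```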

8. Automation

This section extends the automation section defined in the Generic Sensor API [GENERIC-SENSOR] to provide mocking information about the device’s physical orientation in relation to a three dimensional Cartesian coordinate system for the purposes of testing a user agent’s implementation of the AbsoluteOrientationSensor and RelativeOrientationSensor APIs.

8.1. Mock Sensor Type

The AbsoluteOrientationSensor class has an associated mock sensor type which is "absolute-orientation"; its mock sensor reading values dictionary is defined as follows:

    dictionary AbsoluteOrientationReadingValues {
      required FrozenArray<double>? quaternion;
    };

The RelativeOrientationSensor class has an associated mock sensor type which is "relative-orientation"; its mock sensor reading values dictionary is defined as follows:

    dictionary RelativeOrientationReadingValues : AbsoluteOrientationReadingValues {
    };
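Test authors may find it useful to sanity-check mock reading values before handing them to an automation command. The sketch below checks the shape implied by the dictionaries above: a required, nullable `quaternion` member. The function name is hypothetical, and the four-element length check is an assumption taken from the Model section rather than from the dictionary definition itself.

```javascript
// Hypothetical validator for AbsoluteOrientationReadingValues and
// RelativeOrientationReadingValues shaped objects.
function isValidOrientationReadingValues(values) {
  if (values === null || typeof values !== "object") {
    return false;
  }
  // `quaternion` is a required member, although its value may be null.
  if (!("quaternion" in values)) {
    return false;
  }
  const q = values.quaternion;
  if (q === null) {
    return true;
  }
  // Assumption: a non-null quaternion carries four finite numbers.
  return Array.isArray(q) &&
    q.length === 4 &&
    q.every(component => Number.isFinite(component));
}
```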

9. Acknowledgements

Tobie Langel for the work on Generic Sensor API.

10. Conformance

Conformance requirements are expressed with a combination of descriptive assertions and RFC 2119 terminology. The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in the normative parts of this document are to be interpreted as described in RFC 2119. However, for readability, these words do not appear in all uppercase letters in this specification.

All of the text of this specification is normative except sections explicitly marked as non-normative, examples, and notes. [RFC2119]

A conformant user agent must implement all the requirements listed in this specification that are applicable to user agents.

The IDL fragments in this specification must be interpreted as required for conforming IDL fragments, as described in the Web IDL specification. [WEBIDL]